Abstract

The physical memory of a real system is fragmented and spread across multiple memory units. Virtual memory, implemented with the help of the Memory Management Unit (MMU), creates the illusion of a single large, flat main memory. Paging is the mechanism the MMU uses to implement this.

Physical memory is divided into fixed-size frames and virtual memory into fixed-size pages. Each page is the same size as a frame (typically 4 KB).

Extra

- Attempts to access a page without the required permissions result in a page fault

- Users can see memory data at segment level in the `/proc/PID/maps` file: read, write, execute, private/shared

- The binary file `/proc/PID/pagemap` contains the actual page mappings and metadata: the low 55 bits (bits 0–54) contain the frame number or swap location, the higher bits contain metadata
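As a rough illustration of that layout, the sketch below decodes one 64-bit pagemap entry that has already been read into an integer. The function name and the returned dictionary are hypothetical; the bit positions follow the Linux pagemap documentation as I understand it (bits 0–54 frame number or swap info, bit 55 soft-dirty, bit 61 file-backed or shared, bit 62 swapped, bit 63 present). On recent kernels the frame number may read as zero for unprivileged users.

```python
# Minimal sketch: decode a single 64-bit /proc/PID/pagemap entry.
# Names are illustrative; bit positions are taken from the kernel's
# pagemap documentation and should be checked against your kernel version.

LOW_MASK = (1 << 55) - 1   # bits 0-54: frame number, or swap type/offset
SOFT_DIRTY_BIT = 55
FILE_OR_SHARED_BIT = 61
SWAPPED_BIT = 62
PRESENT_BIT = 63


def decode_pagemap_entry(entry: int) -> dict:
    """Split a pagemap entry into its address part and metadata flags."""
    low = entry & LOW_MASK
    info = {
        "present": bool((entry >> PRESENT_BIT) & 1),
        "swapped": bool((entry >> SWAPPED_BIT) & 1),
        "file_or_shared": bool((entry >> FILE_OR_SHARED_BIT) & 1),
        "soft_dirty": bool((entry >> SOFT_DIRTY_BIT) & 1),
    }
    if info["present"]:
        info["pfn"] = low                # physical frame number
    elif info["swapped"]:
        info["swap_type"] = low & 0x1F   # bits 0-4
        info["swap_offset"] = low >> 5   # bits 5-54
    return info


resident = decode_pagemap_entry((1 << 63) | 0x1234)
swapped = decode_pagemap_entry((1 << 62) | (7 << 5) | 3)
```

Each virtual page has one such 8-byte entry in the file, so the entry for a given address sits at byte offset `(address // page_size) * 8`.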

Page Table:

The physical address space of a process can be, and normally is, non-contiguous: physical memory is allocated to a process wherever frames are available. This avoids external fragmentation and the problem of fitting differently sized memory chunks.

The OS keeps track of all free frames. To run a program of size N pages, it has to find N free frames and load the program into them; the page table then records which frame each page was placed in.

Paging still suffers from internal fragmentation, because the last page of an allocation is rarely completely full.

Virtual Address Anatomy

- Split into page number and offset (like segments, only pages are a fixed size)

    +-------------+-------------+
    | page number | page offset |
    +-------------+-------------+
    |      p      |      d      |
    |    m - n    |      n      |
    +-------------+-------------+

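`m` is the address size in bits and `n` is the number of offset bits (the page size is 2^n bytes). `p` is the index into the page table and `d` is the offset within the page.

Essentially: the page number takes `m - n` bits (address bits minus offset bits) and the page offset takes `n` bits (log2 of the page size).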
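The split can be sketched with a shift and a mask. This is a minimal example assuming a 32-bit address (`m = 32`) and 4 KB pages (`n = 12`); the function name and defaults are illustrative only.

```python
# Minimal sketch: split a virtual address into page number (p) and offset (d).
# Assumes m = 32 address bits and n = 12 offset bits (4 KB pages) by default.

def split_virtual_address(vaddr: int, address_bits: int = 32, offset_bits: int = 12):
    if not 0 <= vaddr < (1 << address_bits):
        raise ValueError("address does not fit in the address size")
    page_number = vaddr >> offset_bits             # upper m - n bits
    offset = vaddr & ((1 << offset_bits) - 1)      # lower n bits
    return page_number, offset


p, d = split_virtual_address(0x12345ABC)
print(hex(p), hex(d))  # 0x12345 0xabc
```

With 4 KB pages the offset is exactly the last three hex digits of the address, which makes the split easy to check by eye.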

Page Size

Smaller page sizes (around 4 KB for Linux on 32-bit ARM) are widely regarded as a good default: they are small enough to minimize internal fragmentation while still being large enough to avoid excessive overhead from per-page metadata.

There are arguments for moving to 16 KB pages, which reduce TLB misses without increasing memory cost by much.

Larger Pages: Pros & Cons

Cons:

- Clearing a larger page takes longer, which adds kernel latency, and moving a whole large page when only a small part of its data is actually accessed is costly in terms of performance

- Larger pages have higher internal fragmentation

Pros:

- Larger pages need fewer TLB entries to cover the same amount of memory, which matters because TLB entries are scarce in most systems.

- Larger pages are natively supported on ARM
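To make the trade-off concrete, the sketch below compares 4 KB and 16 KB pages on two measures: bytes wasted to internal fragmentation for one allocation, and how much memory a TLB can cover. The 10,000-byte allocation and the 64-entry TLB are made-up numbers for illustration, not figures from any particular system.

```python
# Minimal sketch of the page-size trade-off. All inputs are hypothetical.

def pages_needed(size_bytes: int, page_size: int) -> int:
    """Number of whole pages required to hold size_bytes (ceiling division)."""
    return -(-size_bytes // page_size)


def internal_fragmentation(size_bytes: int, page_size: int) -> int:
    """Bytes allocated but unused in the last page."""
    return pages_needed(size_bytes, page_size) * page_size - size_bytes


def tlb_reach(entries: int, page_size: int) -> int:
    """Bytes of memory that can be translated without a TLB miss."""
    return entries * page_size


for page_size in (4 * 1024, 16 * 1024):
    waste = internal_fragmentation(10_000, page_size)
    reach = tlb_reach(64, page_size)
    print(f"{page_size // 1024} KB pages: waste={waste} B, TLB reach={reach // 1024} KB")
```

For this example the 16 KB page wastes 6384 bytes instead of 2288, but the same TLB covers four times as much memory.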

Page Table Structure

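In-memory data structure

- Used by the MMU to translate virtual addresses to physical addresses automatically

- Looking up a translation is called a page table walk

Single-level

- Wastes space: one entry is needed for every virtual page, whether or not it is in use (2^20 entries for a 32-bit address space with 4 KB pages)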

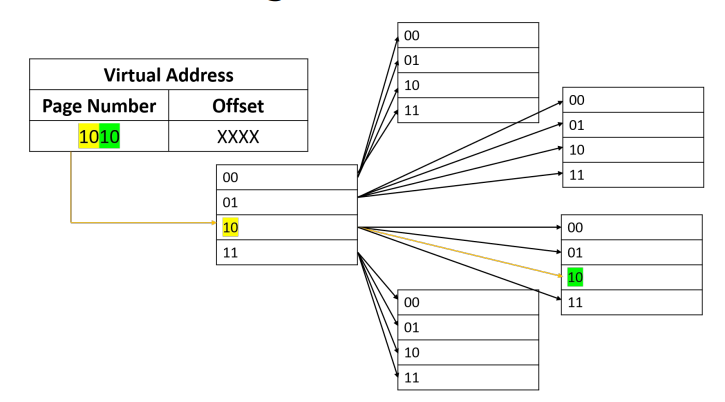

Hierarchical (two-level 32-bit)

- 10-bit first-level page table index

- 10-bit second level page table index

- 12-bit page offset

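- The frame number from the second-level entry is concatenated with the page offset to form the physical address

- The same scheme generalises to N levels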

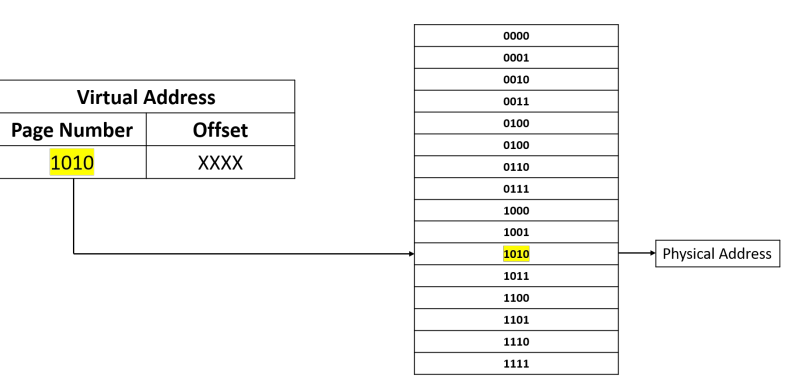
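The 10/10/12 split above can be walked in a few lines. This is a minimal sketch that models each table as a sparse Python dictionary (an assumption for readability; real tables are arrays in memory indexed by the MMU), with a missing key standing in for an unmapped entry.

```python
# Minimal sketch of a two-level page table walk for a 32-bit address:
# 10-bit first-level index, 10-bit second-level index, 12-bit offset.
# Tables are modelled as dicts; the names are illustrative only.

def walk_two_level(vaddr: int, first_level: dict):
    l1_index = (vaddr >> 22) & 0x3FF   # top 10 bits
    l2_index = (vaddr >> 12) & 0x3FF   # middle 10 bits
    offset = vaddr & 0xFFF             # low 12 bits

    second_level = first_level.get(l1_index)
    if second_level is None:
        return None                    # no second-level table: page fault
    frame = second_level.get(l2_index)
    if frame is None:
        return None                    # no mapping for this page: page fault
    return (frame << 12) | offset      # frame number concatenated with offset


# One mapped page: first-level entry 1, second-level entry 3 -> frame 0x2A
page_table = {1: {3: 0x2A}}
print(hex(walk_two_level(0x00403ABC, page_table)))  # 0x2aabc
```

Only the second-level tables that are actually in use need to exist, which is where the space saving over a single-level table comes from.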

Figure captions: image 1 shows a single-level page table; image 2 shows a multi-level page table.

ARM-based Linux Page Tables

Multi-level hierarchical page table (up to 5 levels)

- Page global directory (PGD) – one per process, base pointer in MMU

- Fourth level directory (P4D) – only in 5-level, not supported on ARM

- Page upper directory (PUD) – in 4- and 5-level, supported on AArch64

- Page middle directory (PMD) – intermediate level table

- Page table entry (PTE) – leaf level of the page table, holding the page-to-frame translations

Structure varies based on Linux version and CPU Architecture: Default 32-bit Raspberry Pi = 2-level

Page Metadata

Page table entries store more than just the virtual → physical address mapping

Also contain important metadata about the page (memory protection, sharing, caching, etc.)

- Represented using individual bits for each attribute

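- Lower bits (0–11 in the table below) = Metadata

- Higher bits = Physical address of the frame (frames are 4 KB aligned, so the low 12 bits of the address are free to hold flags)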

Metadata categories: memory protection, caching, sharing

| Macro        | Description                                                                                      | Bit position |
| ------------ | ------------------------------------------------------------------------------------------------ | ------------ |
| L_PTE_VALID  | Is this page resident in physical memory, or has it been swapped out?                            | 0            |
| L_PTE_YOUNG  | Has data in this page been accessed recently?                                                    | 1            |
|              | 4 bits associated with caching                                                                   | 2–5          |
| L_PTE_DIRTY  | Has data in this page been written, so the page needs to be flushed to disk?                     | 6            |
| L_PTE_RDONLY | Does this page contain read-only data?                                                           | 7            |
| L_PTE_USER   | Can this page be accessed by user-mode processes?                                                | 8            |
| L_PTE_XN     | Does this page not contain executable code? (protection against buffer overflow attacks)        | 9            |
| L_PTE_SHARED | Is this page shared between multiple process address spaces?                                     | 10           |
| L_PTE_NONE   | Is this page protected from unprivileged access?                                                 | 11           |
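The bit positions in the table can be turned into masks and used to decode an entry. The sketch below is illustrative only: the `decode_pte` helper is hypothetical, and it assumes a 32-bit entry with 4 KB pages in which everything above bit 11 is the frame address.

```python
# Minimal sketch: decode the metadata bits listed in the table above.
# Assumes bits 12 and up hold the 4 KB-aligned frame address.

PTE_FLAGS = {
    "L_PTE_VALID": 1 << 0,
    "L_PTE_YOUNG": 1 << 1,
    "L_PTE_DIRTY": 1 << 6,
    "L_PTE_RDONLY": 1 << 7,
    "L_PTE_USER": 1 << 8,
    "L_PTE_XN": 1 << 9,
    "L_PTE_SHARED": 1 << 10,
    "L_PTE_NONE": 1 << 11,
}
CACHE_SHIFT = 2      # bits 2-5: the four caching bits
CACHE_MASK = 0xF
FLAG_BITS = 0xFFF    # bits 0-11 are metadata


def decode_pte(pte: int) -> dict:
    return {
        "frame_address": pte & ~FLAG_BITS,
        "cache_bits": (pte >> CACHE_SHIFT) & CACHE_MASK,
        "flags": [name for name, mask in PTE_FLAGS.items() if pte & mask],
    }


# A resident, recently used, user-accessible, non-executable page
example = decode_pte(0x12345000 | 0x303)
print(hex(example["frame_address"]), example["flags"])
```

Storing each attribute as a single bit means a permission check is one AND against the entry, which is cheap enough to do on every access.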

Memory Swapping

When a system allocates memory to processes, it can over-commit and hand out more memory than is physically available. The system must then manage memory to keep the necessary processes running. Swapping is the process of moving pages that are not currently needed from RAM to backing storage, to free up space for pages that are.

The backing storage is persistent storage called swap space, either a dedicated partition or a swap file (commonly placed in the root directory /).

Swapping is an effective strategy because a process can keep running while its nonessential pages are swapped out, as long as it does not access them. The OS handles accesses to swapped-out (non-resident) pages by bringing them back into RAM and updating the page table.

Page Faults

A page fault occurs when a process accesses a page that the MMU cannot translate or that does not permit the requested access. This can happen for several reasons, which the page fault handler checks in turn.

- Firstly, the handler checks if the page is within the process’ address space.

- Next, the handler checks if the page allows the requested access mode.

- If both checks pass, the handler then works out the quickest place to get the data from and performs the appropriate action to obtain the page data.

When the fault has been resolved, the process is resumed.

In Linux there is a differentiation between major faults and minor faults.

- Major faults occur when data must be read in from backing storage.

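- Minor faults occur when the data is already resident in memory (for example, mapped by a different process) but is not yet mapped into the faulting process, so no read from backing storage is needed.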

    // Page fault detected for address x with access mode M
    (x == invalid address) ? return segfault :
    (Mode forbidden at x)  ? return segfault :
    (data for x in swap)   ? swap in page data :
    (data for x in a file) ? read in file data :
    // Demand paging
    (page at x resident)   ? do copy on write : allocate fresh empty page
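The decision chain above can be written as a runnable sketch. The `PageInfo` record and the returned action strings are hypothetical stand-ins for kernel state and kernel actions; only the order of the checks is taken from the pseudocode.

```python
# Minimal sketch of the page fault decision chain. PageInfo is a made-up
# summary of what the handler knows about the faulting address.
from dataclasses import dataclass


@dataclass
class PageInfo:
    in_address_space: bool          # is x inside the process' address space?
    allowed_modes: str = ""         # e.g. "r", "rw", "rx"
    in_swap: bool = False
    file_backed: bool = False
    resident: bool = False


def handle_page_fault(page: PageInfo, mode: str) -> str:
    if not page.in_address_space:
        return "segfault"
    if mode not in page.allowed_modes:
        return "segfault"
    if page.in_swap:
        return "swap in page data"          # needs backing storage
    if page.file_backed:
        return "read in file data"
    # Demand paging
    if page.resident:
        return "copy on write"
    return "allocate fresh empty page"


print(handle_page_fault(PageInfo(True, "rw", in_swap=True), "r"))
print(handle_page_fault(PageInfo(True, "r"), "w"))
```

The cheap validity and permission checks come first, so an illegal access is rejected before any storage access is attempted.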

Working Set Size

The working set size (WSS) of a process is a measure of how many resident pages it needs to function efficiently.

The resident set size (RSS) is the actual number of resident pages for a particular process.

Rules

- RSS <= number of pages in virtual address space

- For a process to run effectively, RSS >= WSS

Note: Temporal locality: pages that were recently used are likely to be used again
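One common way to estimate the working set, which these notes do not spell out, is to count the distinct pages referenced within a recent window of accesses. The sketch below assumes that window-based definition; the page reference trace and the window length are invented for illustration.

```python
# Minimal sketch: estimate the working set from a page reference trace.
# Assumption: the working set at time t is the set of distinct pages
# referenced in the last `window` accesses up to and including t.

def working_set(trace: list, t: int, window: int) -> set:
    if window <= 0:
        raise ValueError("window must be positive")
    start = max(0, t - window + 1)
    return set(trace[start:t + 1])


def runs_effectively(rss: int, wss: int) -> bool:
    """The rule from the notes: a process runs effectively when RSS >= WSS."""
    return rss >= wss


trace = [1, 2, 1, 3, 1, 2, 4, 4, 4, 4]   # hypothetical page numbers
print(working_set(trace, t=5, window=4))       # {1, 2, 3}
print(len(working_set(trace, t=9, window=4)))  # 1
```

Because of temporal locality the set changes slowly over most of a run, so a recent window is a reasonable predictor of the pages needed next.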

Related topics: Demand Paging, In-memory Caches, Translation Lookaside Buffer (TLB), Page Replacement Policies