The first thing to discuss is the difference between testing and code analysis techniques. You will have already seen a lot of information about quality assurance through testing in earlier courses, in particular Object Oriented Software Engineering, covering concepts like unit testing, defects and mocking.

• Automated code analysis plays a distinct role from testing within quality assurance.

• In testing, the developer writes a test that describes a desired behaviour of a specific software artifact within the application, such as an object. The developer has to decide what behaviour the object should perform and what the expected outcome might be, based on their understanding of the requirements for the application, and express this in the test through method invocations followed by assertions. Violations of the assertions indicate that the tested code contains a bug.

• In automated code analysis, application code is compared to global rules about the structure and nature of good quality software. The developer doesn’t need to decide what these rules are before the analysis is performed, although sometimes the rules are expressed as thresholds that can be configured by the developer. Violations of the rules indicate that the code contains a bad smell, suggesting the way the code is written or organised could be improved. Empirical research has shown that code that contains bad smells is more prone to bugs, because the bugs are harder to detect. However, because the automated analysis is not specific to the target application, a developer needs to evaluate the reported warnings to decide if they really do indicate something that needs to be corrected.

• At the end of the lecture, we’ll also see how automated code analysis can be applied to testing.
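To make the contrast concrete, here is a minimal sketch in Python. The function `apply_discount`, the rule "no more than four parameters" and the threshold value are all hypothetical and chosen only for illustration; real analysis tools apply many more rules than this.

```python
import ast

# --- Testing: behaviour that is specific to this application ---
def apply_discount(price, percent):
    """Hypothetical application code under test."""
    return price - price * percent / 100


def test_apply_discount():
    # The developer decides the expected outcome from the requirements.
    assert apply_discount(200, 10) == 180


# --- Automated analysis: a global rule, independent of the application ---
MAX_PARAMETERS = 4  # configurable threshold (an assumed value)


def functions_with_too_many_parameters(source, limit=MAX_PARAMETERS):
    """Return the names of functions with more than `limit` positional parameters."""
    tree = ast.parse(source)
    return [
        node.name
        for node in ast.walk(tree)
        if isinstance(node, ast.FunctionDef) and len(node.args.args) > limit
    ]


SAMPLE = """
def ok(a, b):
    return a + b

def crowded(a, b, c, d, e, f):
    return a
"""

test_apply_discount()
print(functions_with_too_many_parameters(SAMPLE))  # ['crowded']
```

The test only makes sense for this one function, whereas the rule can be run unchanged over any Python source. Whether `crowded` really needs fixing is still a judgement for the developer.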

Use Cases

• Individual: Developers can check changes before submission to review.

• Team: CI pipelines can block merge requests that fail agreed standards.

• Prevents defects entering the mainline

• Optimises code review process

• Assists reviewers in assessing proposed changes.

• Automated code analysis is an important tool in a software engineering workflow for both individual developers and teams.

• Analysis tools can detect a wide variety of bad smells that may cause defects. These can help individuals improve their code prior to submission to a review process. Perhaps more importantly, teams can agree quality assurance standards and express these through automated checks. These checks can be integrated into a continuous integration pipeline to prevent bad smells and possibly defects being introduced into the code base. This reduces the effort involved in undertaking code reviews, since code should already meet agreed standards and reviewers can focus on more substantial issues, e.g. missed opportunities to reuse existing code, or apply refactorings that migrate code towards design patterns.

Static vs Dynamic Analysis

• Static measurement is applied to code at rest, i.e. by inspecting the structure and content of the program files, either source code or compiled binaries. For example, the number of import statements in a class file gives a measure of how coupled that class file is to other classes in the application. Static analysis sometimes depends on intermediate representations of programs, such as abstract syntax trees, since these may dispense with unnecessary detail, such as code comments.

• Dynamic measurement is applied during execution. For example, the amount of time an application thread spends inside a class, method or object can provide information about the amount of functionality provided by different parts of an application. Dynamic measurement is dependent on the specification of appropriate test scenarios in which to evaluate a program.

• Confusingly, some measurements with the same name can be taken both statically and dynamically. However, they are usually measuring different things and will inform us about different characteristics in the code.

• For example, the number of method calls from one class to another can be measured statically (by counting the number of unique contexts in which a method is referenced in the source code) or dynamically (by counting all invocations of a method that occur during program execution). Note, however, that these two measures are telling us different things. The dynamic count can indicate where performance optimisations might have the greatest benefit. The static count tells us how many places in the code depend on the method, and so how brittle a change to it might be.

• This lecture will cover examples of both static and dynamic analysis, and we will look at more examples of dynamic analysis in the lecture on software architecture.
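The method-call example can be sketched in a few lines of Python. This is an illustration only: the sample program and the helper names `static_call_sites` and `dynamic_call_count` are hypothetical, and the static count here only recognises direct calls by name.

```python
import ast

SOURCE = """
def helper(x):
    return x * 2

def run(n):
    total = 0
    for i in range(n):
        total += helper(i)    # one call site, executed n times
    return total + helper(n)  # a second call site, executed once
"""


def static_call_sites(source, name):
    """Count the places in the source text where `name` is called."""
    tree = ast.parse(source)
    return sum(
        1
        for node in ast.walk(tree)
        if isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id == name
    )


def dynamic_call_count(source, name, entry, *args):
    """Run `entry(*args)` and count how often `name` is actually invoked."""
    namespace = {}
    exec(compile(source, "<sample>", "exec"), namespace)
    original = namespace[name]
    calls = 0

    def counting_wrapper(*a, **kw):
        nonlocal calls
        calls += 1
        return original(*a, **kw)

    # Functions defined by exec look up globals in `namespace` at call time,
    # so replacing the entry here intercepts every call to `name`.
    namespace[name] = counting_wrapper
    namespace[entry](*args)
    return calls


print(static_call_sites(SOURCE, "helper"))               # 2
print(dynamic_call_count(SOURCE, "helper", "run", 100))  # 101
```

The static figure (2) is a property of the source text, while the dynamic figure (101) depends entirely on the scenario that was executed, which is why dynamic measurement needs appropriate test scenarios.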

Opinionation of Testing

• The diagram shows the spectrum of opinionation of different kinds of automated analyses that can be performed on a program.

• Opinionation is the strictness of the output from an automated analysis. The more opinionated an analysis, the more likely the team will need to correct rather than ignore the reported warning.

• The most extreme case of opinionation shown is compiler or interpreter syntax errors. If the program cannot be understood by the compiler then no binary will be produced and it can’t be executed.

• Conversely, design metrics need careful interpretation and are often most useful for informing code reviews, rather than being used prescriptively.

• Most automated analysis sits somewhere in the middle between completely prescriptive and completely subjective. Warnings from these tools, including linters and design metrics, don’t have to be fixed in order for the program to at least execute, but doing so is likely to improve the quality of the code and enhance long term maintainability. The less opinionated an automated analysis is, the more likely it is that it can be configured or tuned so that it doesn’t generate warnings the development team don’t feel are relevant to them. For example, a code-style linter might be configured to skip source code files that are automatically generated, since the team will be unlikely to edit these files directly themselves.

• In some ways, you can think of code review as being on the far right of the spectrum. Done well, it should focus on high level considerations, informed by design metrics, that are more subjective.

Linting

Agreeing consistent code style standards for a software project is important for improving readability. This is exactly the same as for publishers of natural language documents, such as news sites, newspapers and technical books. These organisations have extensive rules for writing style for their authors. Writers must say things a certain way to ensure consistency across outputs. This makes it easier for readers to comprehend the content.

• The same is true for source code: having a consistent style across a code base contributed to by different developers makes the code much easier to read and understand. Resolving bugs and adding new features is much easier when code is written consistently because developers will be able to predict where work will need to be done and how it will need to be written.

• Code standards therefore strongly contribute to the idea of self documenting code. There is a temptation when code is hard to read, inconsistent and complex to supplement it with comments to explain the purpose and implementation. This is unfortunate because as the code is evolved the comments will need to be updated, which often doesn’t take place. It is generally better to write code that is sufficiently clear and readable that the need for any supplementary comments is eliminated or at least minimised. Coding standards help with this, because they provide strong direction for both writers and readers of code (both parties have some idea what to expect).

• Where possible, coding standards should be based on agreed language standards. This makes it easier for new developers to join projects because they don’t have to learn an idiosyncratic style. Ask your coach for advice on how to choose a linting tool suitable for your project and integrate it into your continuous integration pipeline.

Code Style

Linting tools can check for a wide variety of style rules that can enhance readability. I’ll use the PEP8 standard to give some examples here, but much of the advice applies to any programming language.

• Most style guides will set a limit for the length of a line of code in a file. This is because the longer a line is the further the eye has to track across the screen to read the statement. Historically style guides set this limit to 80 characters, purely because this was the visual limit of the screen itself. More recent guides suggest limits of 120 characters.

• The dimensions of source code layout are important in other ways. For example, having consistent spaces around operators in an expression can make them easier to read. Adding extra space can lead to misinterpretation. In the example, a reader might be tempted to think that the addition operator is applied before the exponent because the exponent operator is visually further away from its operands. Similarly, placing a blank line between logical chunks of code in a function organises the code into paragraph-like chunks, making the purpose easier to comprehend. This also makes spotting refactoring opportunities easier, because you can more easily see blocks of code that could be extracted to their own methods.

• Naming conventions help readability by making the purpose of variables and functions easier to predict. The format of the name is usually dictated by the language style guide. For example, Python uses snake case for functions, methods and variables and CapWords (upper camel case) for classes. Using literal names that describe data content for variables or behaviour for methods is preferred because this makes the purpose explicit. Historically, programmers used acronyms or single characters for variable names for brevity due to the limits of the technology they were working on, as well as the background of our discipline in mathematics. The purpose of an identifier would be documented in comments. However, this isn’t necessary anymore and creates the risk of disconnection between the program and the documentation.

• You may not think spelling is too important for variable names in programs as long as the spelling is consistent. However, correct spelling is important for two reasons. First, misspelling creates ambiguity, similar to obscure identifier names. Perhaps the author in the example here is referring to the German composer rather than a file handle? Second, poor spelling can be a source of bugs, particularly in dynamic languages like Python. A variable might be initialised with one name and then assigned a new value under a slightly different name.

• Style guides should also specify rules for other types of layout, such as parentheses and control structures. For example, the Python guide generally encourages the removal of parentheses in expressions where they are not required. Conversely, in Java, most guides encourage the explicit use of curly braces to enclose the body of a control structure even if the structure contains only one line of code.
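The slide examples are not reproduced in these notes, so the following is a hypothetical illustration of three of the points above: misleading spacing, a misspelt variable, and a line-length rule. The names `total_with_typo` and `long_lines` and the limit of 80 are assumptions for the sketch, not part of any particular linter.

```python
# Misleading spacing: the tight "x+y" looks like a group, but ** binds
# more tightly than +, so this is x + (y ** 2).
x, y = 3, 4
misleading = x+y ** 2
clearer = x + y**2
print(misleading, clearer, (x + y) ** 2)  # 19 19 49


def total_with_typo(prices):
    """Intended to sum the prices, but a misspelling hides a bug."""
    total = 0
    for price in prices:
        # Typo: "totl" silently creates a new variable, so "total" never changes.
        totl = total + price
    return total


print(total_with_typo([5, 10, 15]))  # 0, not 30


def long_lines(source, limit=80):
    """Return the 1-based numbers of lines longer than `limit` characters."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if len(line) > limit
    ]


print(long_lines("short\n" + "x" * 81 + "\nalso short"))  # [2]
```

Python accepts the misspelt assignment without complaint. Many linters would report `totl` as assigned but never used, which is often the first hint of this kind of bug.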

Bug Detection Ex

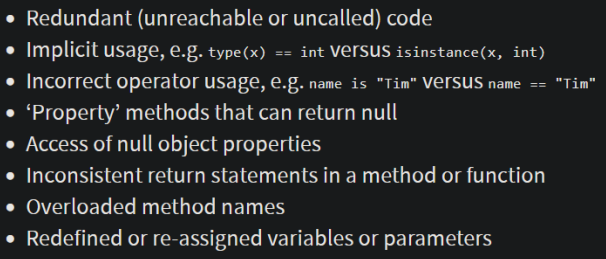

Beyond readability and poor style, static analysis can also identify likely bugs from poor usage patterns. Examples are:

• Some tools check for unreachable or uncalled code, since this may be unintended or code that is no longer needed and should be deleted. Unreachable code is easier to detect, since there is no possible path through the code base that would result in its execution (code in a function immediately after a return statement is the most obvious example). Uncalled code is code that could be used, perhaps by a dependent, but doesn’t appear to be. Consider, for example, a Java unit test class that contains a public, but uncalled, utility method. If the method has no apparent use in the test case it should perhaps be deleted, since a test class shouldn’t have any dependents. However, it may be that the method is used elsewhere, in which case it should be refactored by being moved into its own utility class.

• Some tools can also check for incorrect uses of operators. For example, in Python the “is” operator compares object identity (is the object referenced by two variables the same?), whilst the == operator tests for equality (are the values of the two objects equal?). Confusing the two can be a source of bugs.

• Inappropriate handling of null references can often be detected by automated analysis. Null references are often the result of incorrectly initialised variables. This might happen if initialisation of a variable depends on external factors that might fail, such as a network request. The analysis tool can warn the developer that they haven’t handled the situation in which the network call failed and thus the variable wasn’t properly initialised before being used.

• Inconsistent return statements are a particular problem in dynamically typed languages such as Python, where the return type isn’t guaranteed at invocation of a function. This can make handling of the returned value difficult. Similarly, it can be tempting to reuse the same variable identifier but for different purposes and containing different kinds of data.
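Some of these patterns can be illustrated with a short Python sketch. The sample code and the checker `statements_after_return` are hypothetical; the checker only finds a statement that directly follows a `return` in the same block, which is far simpler than the reachability analysis real tools perform.

```python
import ast

# 1. Identity vs equality: two distinct lists with equal contents.
first = [1, 2, 3]
second = [1, 2, 3]
print(first == second)  # True: the values are equal
print(first is second)  # False: they are different objects


# 2. Inconsistent returns: an int on success, an implicit None otherwise.
def find_index(items, target):
    for index, item in enumerate(items):
        if item == target:
            return index
    # No explicit return here, so callers receive None.


print(find_index(["a", "b"], "b"), find_index(["a", "b"], "z"))  # 1 None


# 3. A very small unreachable-code check built on the syntax tree.
def statements_after_return(source):
    """Return line numbers of statements that directly follow a `return`."""
    unreachable = []
    for node in ast.walk(ast.parse(source)):
        for field in ("body", "orelse", "finalbody"):
            block = getattr(node, field, None)
            if not isinstance(block, list):
                continue  # e.g. the body of a lambda is a single expression
            for statement, following in zip(block, block[1:]):
                if isinstance(statement, ast.Return):
                    unreachable.append(following.lineno)
    return unreachable


SAMPLE = """
def f(x):
    if x:
        return 1
        x += 1
    return 0
"""

print(statements_after_return(SAMPLE))  # [5]
```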

Test Code Coverage

Measured during test suite execution:

effectiveness = unique LoC executed / total LoC

efficiency = total LoC / (total LoC + LoC executed count)

Granularity:

- Lines of code

- Statements

- Expressions
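As a worked example of the two coverage formulas at line granularity, here is a minimal sketch. It assumes that "LoC executed count" means the total number of line executions recorded during the test run (so a line inside a loop may count many times); the hit counts are made-up data, and `coverage_metrics` is a hypothetical helper rather than the API of any coverage tool.

```python
def coverage_metrics(hit_counts):
    """Compute (effectiveness, efficiency) from per-line execution counts.

    `hit_counts` maps each executable line number to the number of times
    the test suite executed it.
    """
    total_loc = len(hit_counts)
    unique_executed = sum(1 for hits in hit_counts.values() if hits > 0)
    executed_count = sum(hit_counts.values())  # assumed meaning of "LoC executed count"

    effectiveness = unique_executed / total_loc
    efficiency = total_loc / (total_loc + executed_count)
    return effectiveness, efficiency


# Hypothetical data: 8 executable lines, 2 never run, 2 inside a hot loop.
hits = {1: 1, 2: 1, 3: 10, 4: 10, 5: 0, 6: 0, 7: 1, 8: 1}
print(coverage_metrics(hits))  # (0.75, 0.25)
```

Here 6 of the 8 lines were reached (effectiveness 0.75), but the suite performed 24 line executions to get there, giving an efficiency of 8 / (8 + 24) = 0.25.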

Mutant Testing

Mutation testing is a method used to evaluate the quality of software tests. It involves modifying the software’s source code in various ways to ensure the tests can detect these changes. Here’s an explanation of key terms associated with mutation testing:

(i) Mutation

In the context of mutation testing, a mutation refers to a deliberate and small change introduced to the source code of a program. This change is meant to simulate an error or fault. The purpose is to create a slightly altered version of the program, which helps to check whether the existing test suite can identify errors and failures effectively.

(ii) Mutant

A mutant is the version of the software that results from applying a mutation. Essentially, it is a new version of the program with a single small modification (mutation) intended to mimic a potential fault. The effectiveness of a test suite is measured by its ability to detect and fail these mutants, which demonstrates its capability to catch similar real-world errors in the code.

(iii) Survivor

A survivor in mutation testing is a mutant that passes all the test cases, meaning the test suite was unable to detect the injected fault. The presence of survivors indicates potential weaknesses in the test suite’s coverage or sensitivity to detecting certain types of errors. Identifying and analyzing survivors help improve the test suite by adding new tests specifically aimed at catching the undetected changes.

(iv) Effectiveness

The effectiveness of mutation testing is measured by its ability to identify weaknesses in the test suite. It is quantified by the proportion of mutants that are successfully detected (killed) by the test suite. A higher percentage of killed mutants indicates more effective testing. Effectiveness helps developers understand how well their test suite can catch faults and where it may need improvement. It essentially reflects the thoroughness of the testing in finding real faults in the software.

(v) Efficiency

Efficiency in mutation testing refers to the practical aspects of performing mutation testing considering the resources and time consumed. Since generating, running, and evaluating mutants can be resource-intensive and time-consuming, efficiency looks at how well the mutation testing process is optimized. This includes considerations such as the speed of mutant generation, the execution time of tests against mutants, and the overall impact on the development cycle. Efficient mutation testing strategies ensure that the benefits of identifying test suite weaknesses outweigh the costs in terms of computational resources and time.

Types of Mutations

There are many different types of mutation operation, including replacing:

• conditional operators with their boundary counterparts

• infix mathematical operators with other operations

• member field values with default or other values

• variable values with default values

• constructor calls with null assignments

• returned values with default values

• method call results with default values.
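The first of these operations, a conditional boundary mutation, can be sketched with Python's `ast` module. This is an illustration under assumptions: the function `is_adult`, the two tiny test suites and the `BoundaryMutator` class are hypothetical, and real mutation testing tools generate and run many mutants automatically.

```python
import ast

SOURCE = """
def is_adult(age):
    return age >= 18
"""


class BoundaryMutator(ast.NodeTransformer):
    """Replace every `>=` with `>` (a conditional boundary mutation)."""

    def visit_Compare(self, node):
        self.generic_visit(node)
        node.ops = [ast.Gt() if isinstance(op, ast.GtE) else op for op in node.ops]
        return node


def load(tree):
    """Compile a module syntax tree and return its `is_adult` function."""
    namespace = {}
    exec(compile(tree, "<sample>", "exec"), namespace)
    return namespace["is_adult"]


original = load(ast.parse(SOURCE))
mutated_tree = ast.fix_missing_locations(BoundaryMutator().visit(ast.parse(SOURCE)))
mutant = load(mutated_tree)


def weak_suite(fn):
    """Passes if fn behaves correctly well away from the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn):
    """Adds a check exactly on the boundary value."""
    return weak_suite(fn) and fn(18) is True


print(weak_suite(original), weak_suite(mutant))      # True True  (mutant survives)
print(strong_suite(original), strong_suite(mutant))  # True False (mutant killed)
```

The survivor under the weak suite points directly at the missing test: nothing checked the behaviour at exactly 18.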

Mutation Testing Metrics

Once all the mutants have been tested, metrics for both effectiveness and efficiency can be calculated.

mutant survival rate = # survivors / all mutants

efficiency = killed mutants / failed tests
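A short sketch of the arithmetic, using made-up numbers. It assumes that "failed tests" is the total number of test failures recorded across all mutant runs and that every mutant is either killed or a survivor; `mutation_metrics` is a hypothetical helper.

```python
def mutation_metrics(total_mutants, survivors, failed_tests):
    """Return (mutant survival rate, efficiency) for a mutation testing run."""
    killed = total_mutants - survivors  # assumes each mutant is killed or survives
    survival_rate = survivors / total_mutants
    efficiency = killed / failed_tests
    return survival_rate, efficiency


# Hypothetical run: 20 mutants, 5 survived, 30 test failures in total.
print(mutation_metrics(total_mutants=20, survivors=5, failed_tests=30))  # (0.25, 0.5)
```

With these numbers a quarter of the mutants went undetected, and on average two tests failed for each mutant that was killed, which suggests some overlap between the tests.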