Container orchestration automates the deployment, management, scaling, and networking of containers. As applications grow in complexity and scale, manually managing individual containers becomes impractical, making orchestration essential for production container deployments.

What is Container Orchestration?

Container orchestration refers to the automated arrangement, coordination, and management of containers. It handles:

- Provisioning and deployment of containers

- Resource allocation

- Load balancing across multiple hosts

- Health monitoring and automatic healing

- Scaling containers up or down based on demand

- Service discovery and networking

- Rolling updates and rollbacks
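Scaling based on demand is often a simple proportional rule. Kubernetes' Horizontal Pod Autoscaler, for example, documents the formula desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue) and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below applies the rule to a single metric. The function name, the min/max bounds passed as parameters, and the single-metric simplification are assumptions for illustration, not the HPA's actual interface.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula.

    Hypothetical helper: names and parameters are illustrative, not a real API.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        desired = current_replicas
    else:
        # Scale in proportion to how far the metric is from its target.
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, 90.0, 60.0))
# 62% against 60%: the ratio (~1.03) is within tolerance, so stay at 4
print(desired_replicas(4, 62.0, 60.0))
```

The tolerance band matters in practice: without it, small metric fluctuations around the target would cause constant scale-up and scale-down churn.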

Why Container Orchestration is Needed

Challenges of Manual Container Management

- Scale: Managing hundreds or thousands of containers manually is impractical

- Complexity: Multi-container applications have complex dependencies

- Reliability: Manual intervention increases the risk of errors

- Resource Utilization: Optimal placement of containers requires sophisticated algorithms

- High Availability: Fault tolerance requires automated monitoring and recovery

Benefits of Container Orchestration

- Automated Operations: Reduces manual intervention and human error

- Optimal Resource Usage: Intelligent scheduling of containers

- Self-healing: Automatic recovery from failures

- Scalability: Easy horizontal scaling

- Declarative Configuration: Define desired state rather than imperative steps

- Service Discovery: Automatic linking of interconnected components

- Load Balancing: Distribution of traffic across container instances

- Rolling Updates: Gradual replacement of instances, enabling zero-downtime deployments
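A rolling update replaces old instances a few at a time, bounded by how many extra pods may exist during the rollout (Kubernetes calls this maxSurge) and how many may be unavailable (maxUnavailable). The Python simulation below is a simplified sketch, not Kubernetes' actual Deployment controller: the helper name is hypothetical, both limits are treated as absolute counts, and new pods are assumed to become ready the instant they start.

```python
from typing import List, Tuple


def rolling_update_plan(replicas: int, max_surge: int,
                        max_unavailable: int) -> List[Tuple[int, int]]:
    """Simulate a rolling update as a sequence of (old_pods, new_pods) states.

    Hypothetical helper. Simplifying assumption: new pods are ready as soon as
    they start, so every running pod counts as available.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    states = [(old, new)]
    while old > 0 or new < replicas:
        # Start as many new pods as the surge budget allows.
        up = min(replicas - new, replicas + max_surge - (old + new))
        if up > 0:
            new += up
            states.append((old, new))
        # Stop old pods while keeping at least replicas - max_unavailable running.
        down = min(old, (old + new) - (replicas - max_unavailable))
        if down > 0:
            old -= down
            states.append((old, new))
    return states


for old, new in rolling_update_plan(replicas=3, max_surge=1, max_unavailable=0):
    print(f"old={old} new={new}")
```

With max_surge=1 and max_unavailable=0 the plan never drops below 3 running pods and never exceeds 4. Raising either limit speeds up the rollout, at the cost of extra temporary capacity or reduced availability.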

Core Concepts in Container Orchestration

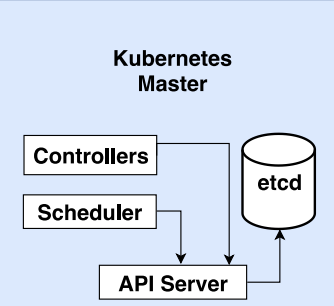

Master

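- Collection of processes managing the cluster state; often run on a single dedicated node, or replicated across several nodes for high availability (current Kubernetes documentation calls this the control plane)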

- Controllers, e.g. replication and scaling controllers

- Scheduler: places pods based on resource requirements, hardware and software constraints, data locality, deadlines…

- etcd: reliable distributed key-value store used to persist the cluster state
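Production schedulers weigh many more factors, but the core idea of placing pods by resource requirements and constraints can be sketched in two phases: filter out nodes that cannot run the pod, then score the rest and pick the best. The Kubernetes scheduler is organized along the same filter-then-score lines. Everything in this Python sketch (the Node and PodSpec classes, exact label matching as the only constraint, and a "most free capacity left" score) is a simplified assumption for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    cpu_free: float   # cores currently unreserved
    mem_free: float   # GiB currently unreserved
    cpu_total: float
    mem_total: float
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class PodSpec:
    name: str
    cpu: float
    mem: float
    node_selector: Dict[str, str] = field(default_factory=dict)


def feasible(node: Node, pod: PodSpec) -> bool:
    """Filter: the node has room for the pod and carries every required label."""
    fits = node.cpu_free >= pod.cpu and node.mem_free >= pod.mem
    labels_ok = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return fits and labels_ok


def score(node: Node, pod: PodSpec) -> float:
    """Score: average fraction of CPU and memory left free after placement."""
    cpu_left = (node.cpu_free - pod.cpu) / node.cpu_total
    mem_left = (node.mem_free - pod.mem) / node.mem_total
    return (cpu_left + mem_left) / 2


def schedule(pod: PodSpec, nodes: List[Node]) -> Optional[str]:
    """Pick a node for `pod` and reserve its resources; None means 'pending'."""
    candidates = sorted((n for n in nodes if feasible(n, pod)), key=lambda n: n.name)
    if not candidates:
        return None
    # max() keeps the first of equal scores, so ties go to the alphabetically first node.
    best = max(candidates, key=lambda n: score(n, pod))
    best.cpu_free -= pod.cpu
    best.mem_free -= pod.mem
    return best.name


nodes = [
    Node("node-a", cpu_free=1.0, mem_free=2.0, cpu_total=4.0, mem_total=8.0),
    Node("node-b", cpu_free=3.0, mem_free=6.0, cpu_total=4.0, mem_total=8.0),
    Node("node-c", cpu_free=4.0, mem_free=8.0, cpu_total=4.0, mem_total=8.0,
         labels={"disk": "ssd"}),
]
for pod in [PodSpec("web-1", 0.5, 1.0),
            PodSpec("db", 2.0, 4.0, node_selector={"disk": "ssd"}),
            PodSpec("web-2", 0.5, 1.0),
            PodSpec("batch", 8.0, 1.0)]:
    print(pod.name, "->", schedule(pod, nodes))
```

Here web-1 lands on the emptiest node, db is restricted to node-c by its selector, web-2 then goes to node-b because node-c is now busier, and batch stays pending because no node has 8 free cores.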

Cluster

A collection of host machines (physical or virtual) that run containerized applications managed by the orchestration system.

Node

An individual machine (physical or virtual) in the cluster that can run containers.

Container

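A lightweight, isolated package of an application and its dependencies, typically running a single application or process. In Kubernetes, the smallest deployable unit is the pod rather than the individual container.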

Pod

In Kubernetes, a group of one or more containers with shared storage and network resources, together with a specification for how to run those containers.

Service

An abstraction that defines a logical set of pods and a policy to access them, often used for load balancing and service discovery.
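The Service abstraction can be sketched as a label selector (which pods belong to the set) plus an access policy (which of them receives a given request). In this Python illustration the class names, the readiness flag, and the round-robin policy are all assumptions; real implementations route at the network layer rather than in application code and support several policies (Kubernetes' kube-proxy, for instance, offers more than one proxy mode).

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Pod:
    name: str
    ip: str
    labels: Dict[str, str]
    ready: bool = True


class Service:
    """Hypothetical service: a label selector plus a round-robin access policy."""

    def __init__(self, selector: Dict[str, str]) -> None:
        self.selector = selector
        self._counter = itertools.count()

    def endpoints(self, pods: List[Pod]) -> List[Pod]:
        # Membership is defined by labels, so pods can come and go without
        # clients needing to know their names or IPs.
        return [p for p in pods
                if p.ready and all(p.labels.get(k) == v
                                   for k, v in self.selector.items())]

    def pick(self, pods: List[Pod]) -> Pod:
        # Re-evaluate endpoints on every request, then rotate through them.
        eps = self.endpoints(pods)
        if not eps:
            raise LookupError("no ready endpoints for selector")
        return eps[next(self._counter) % len(eps)]


pods = [
    Pod("web-1", "10.0.0.1", {"app": "web"}),
    Pod("web-2", "10.0.0.2", {"app": "web"}),
    Pod("db-1", "10.0.0.3", {"app": "db"}),
    Pod("web-3", "10.0.0.4", {"app": "web"}, ready=False),  # failing health check
]
web = Service({"app": "web"})
for _ in range(4):
    backend = web.pick(pods)
    print(backend.name, backend.ip)
```

Requests alternate between web-1 and web-2: db-1 does not match the selector, and web-3 is excluded until it reports ready.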

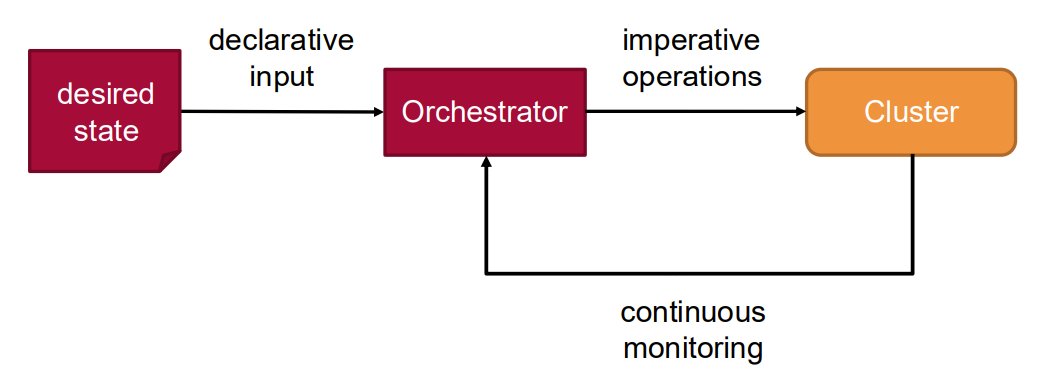

Desired State

The specification of how many instances should be running, what version they should be, and how they should be configured.

Reconciliation Loop

The process by which the orchestration system continuously works to make the current state match the desired state.
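Desired state and the reconciliation loop fit together as observe, compare, act. The Python sketch below shows a single reconciliation pass; DesiredState, reconcile, and the dictionary standing in for the observed current state are hypothetical, and for brevity the pass stops mismatched instances outright instead of rolling them. A real controller would run such a pass repeatedly, on a timer or whenever it is notified that state has changed.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class DesiredState:
    replicas: int
    version: str


def reconcile(desired: DesiredState, running: Dict[str, str]) -> List[str]:
    """One pass of a hypothetical control loop.

    `running` maps instance name -> version and stands in for the observed
    current state; it is mutated toward `desired` and the actions are returned.
    """
    actions: List[str] = []
    # 1. Stop instances on the wrong version (they are recreated in step 3).
    for name, version in sorted(running.items()):
        if version != desired.version:
            del running[name]
            actions.append(f"stop {name} ({version})")
    # 2. Scale down surplus instances, highest name first (arbitrary but deterministic).
    while len(running) > desired.replicas:
        name = max(running)
        del running[name]
        actions.append(f"stop {name}")
    # 3. Scale up or heal: start instances until the count matches.
    next_id = 0
    while len(running) < desired.replicas:
        name = f"app-{next_id}"
        if name not in running:
            running[name] = desired.version
            actions.append(f"start {name} ({desired.version})")
        next_id += 1
    return actions


desired = DesiredState(replicas=3, version="v2")
running = {"app-0": "v2", "app-1": "v1"}
print(reconcile(desired, running))  # converge: replace app-1, add app-2
print(reconcile(desired, running))  # already converged: no actions
del running["app-2"]                # simulate a crashed instance
print(reconcile(desired, running))  # self-healing: app-2 is restarted
```

Because the pass only compares states, running it again after convergence does nothing, and recovering from a crash goes through the same code path as the initial deployment.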

Key Features of Orchestration Platforms

Scheduling

- Placement Strategies: Determining which node should run each container

- Affinity/Anti-affinity Rules: Controlling which containers should or shouldn’t run together

- Resource Constraints: Considering CPU, memory, and storage requirements

- **Taints and Toler