Short for Transmission Control Protocol. See also: TCP Congestion Control

Also note: Transport Layer Security (TLS)

Abstract

TCP is a transport layer protocol that prioritises reliable data transfer, though this reliability can come at the cost of higher latency.

In general, TCP (as in TCP/IP) is the preferred transport layer protocol over UDP.

Known for:

- Reliable, ordered, byte stream delivery service running over IP

- Lost packets are retransmitted; ordering is preserved; message boundaries are not preserved

- Adapts sending rate to match network capacity (congestion control)

- Adds port number to identify services

- Used by applications needing reliability; the default choice for most applications

Observation

Links are now fast enough that the round trip time is generally the dominant factor, even for relatively slow links. The best way to improve application performance is usually to reduce the number of messages that need to be sent from client to server. That is, to reduce the number of round trips. Unless you’re sending a lot of data, increasing the bandwidth generally makes very little difference to performance.

Structure

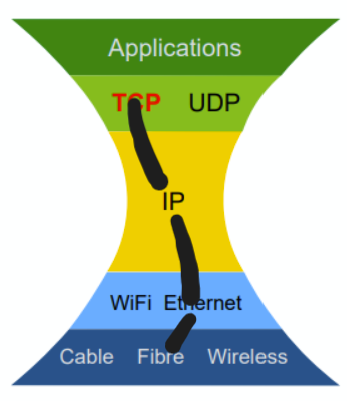

- TCP segments are carried as data within IP packets

- IP packets are carried as data in data link layer frames

- Data link layer frames are delivered over the physical layer

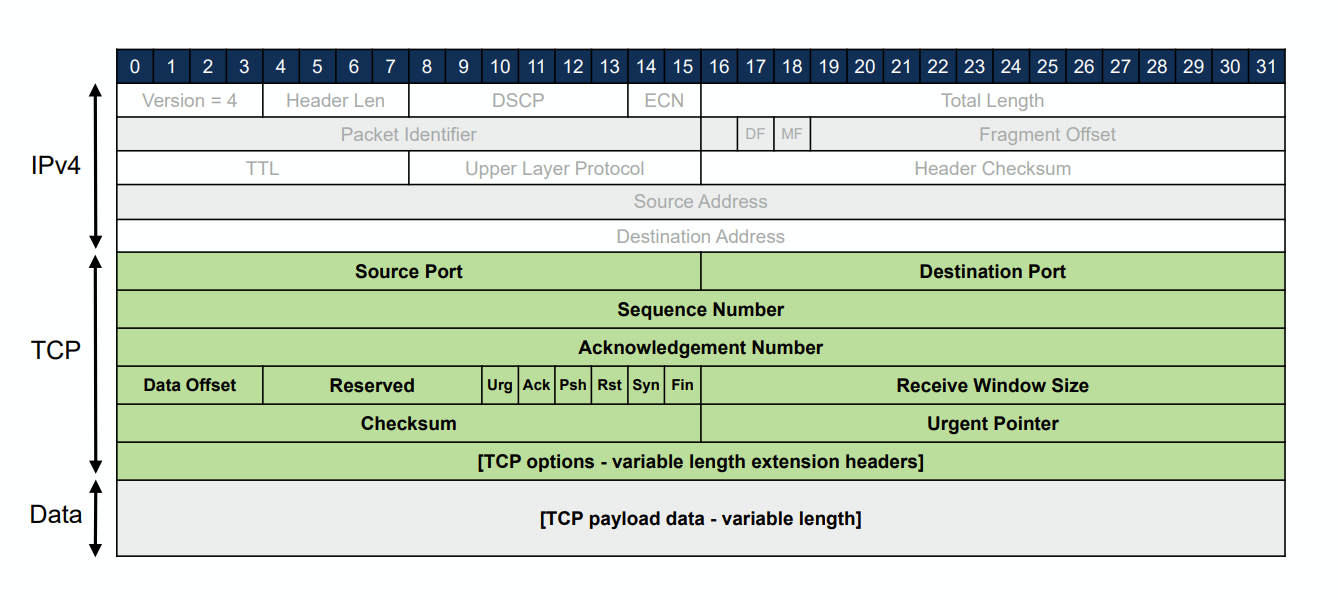

Segment Format

Definitions:

- Source Port: the port the message originates from

- Destination Port: the port the message is to be delivered to

- Sequence Number

- Acknowledgement Number
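As a rough illustration of where these four fields sit, the sketch below packs and unpacks the fixed 20-byte TCP header (no options) with Python's struct module. The port numbers, sequence number, and window value are made up for the example, and parse_tcp_header is a hypothetical helper name, not part of any library.

```python
import struct

# Fixed 20-byte TCP header without options, in network byte order:
# source port, destination port, sequence number, acknowledgement number,
# data offset + flags, window, checksum, urgent pointer.
TCP_HEADER = struct.Struct("!HHIIHHHH")

SYN_FLAG = 0x002
ACK_FLAG = 0x010


def parse_tcp_header(segment: bytes) -> dict:
    """Extract the fields discussed in the notes from a raw TCP segment."""
    src, dst, seq, ack, off_flags, _window, _checksum, _urgent = (
        TCP_HEADER.unpack_from(segment)
    )
    return {
        "source_port": src,
        "destination_port": dst,
        "sequence_number": seq,
        "acknowledgement_number": ack,
        "syn": bool(off_flags & SYN_FLAG),
        "ack_flag": bool(off_flags & ACK_FLAG),
    }


# Hypothetical SYN segment: data offset of 5 words (20 bytes), SYN flag set.
example = TCP_HEADER.pack(50000, 80, 1000, 0, (5 << 12) | SYN_FLAG, 65535, 0, 0)
header = parse_tcp_header(example)
print(header)
```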

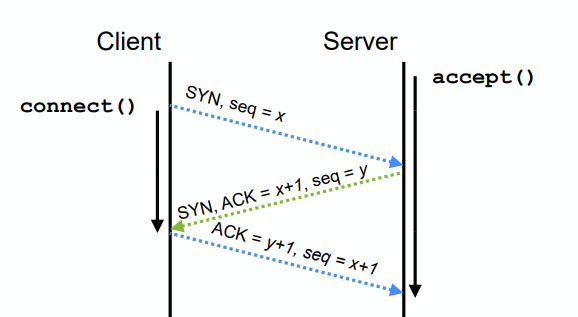

Client-Server Connection Establishment

The client and the server perform a three-way handshake: SYN, SYN-ACK, ACK.

The handshake is triggered by the client's connect() call, while the server waits in accept().

Specifically:

- The server calls accept() and waits

- The connect() call triggers the three-way handshake:

  - Client → Server: SYN (“synchronise”) bit set in TCP header

    - Client’s initial sequence number chosen at random

  - Server → Client: SYN + ACK for client’s initial sequence number

    - Server’s initial sequence number chosen at random

  - Client → Server: ACK for server’s initial sequence number

- When handshake completes, connection is established
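The accept() and connect() steps above can be sketched with Python's socket module over the loopback interface. The handshake itself is carried out by the operating system, so the code only shows the two calls that surround it. The address, the use of port 0, and the serve_once helper are choices made for this example.

```python
import socket
import threading


def serve_once(listener: socket.socket, received: list) -> None:
    # accept() blocks until a connection has completed the three-way handshake.
    conn, _addr = listener.accept()
    with conn:
        # Read until recv() returns b"", which means the client closed.
        while chunk := conn.recv(1024):
            received.append(chunk)


listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
listener.listen(1)
port = listener.getsockname()[1]

received = []
server = threading.Thread(target=serve_once, args=(listener, received))
server.start()

# connect() sends the SYN and returns once the handshake has completed.
client = socket.create_connection(("127.0.0.1", port))
client.sendall(b"hello")
client.close()

server.join()
listener.close()
print(b"".join(received))
```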

SYN-ACK

Abstract

This “Handshake” is in general the backbone of a TCP connection… //** TODO

Example Timeline:

Latency Impact on Performance:

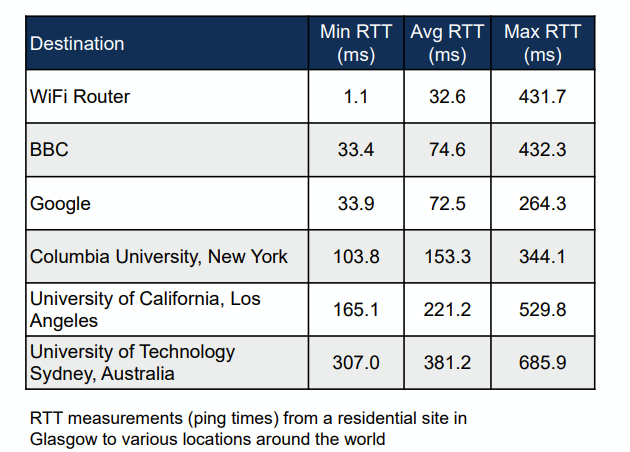

What is the typical RTT?

- Depends on Distance

- Depends on Network Congestion

Typical Bandwidths:

- ADSL2+ ⇒ 25Mbps

- VDSL ⇒ 50Mbps

- 4G Wireless ⇒ 15-30Mbps

LATENCY HURTS PERFORMANCE

- Connection Establishment is slower

- Retrieving Data takes longer

TLS makes this worse, since its own handshake adds further round trips.

As RTT increases, the benefit of increasing bandwidth shrinks.

Discussion

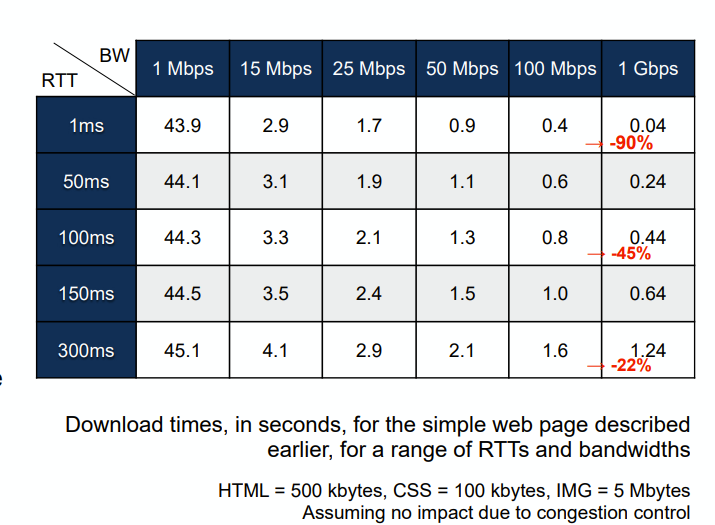

What’s interesting is how the download time varies as the link speed improves. If we look at the top row, with 1ms round trip time, we see that if we increase the bandwidth by a factor of ten, from 100Mbps to 1Gbps, the time taken to download the page goes down by a factor of ten, a 90% reduction. The link is ten times faster, and the page downloads ten times faster. If we look instead at the bottom row, with 300ms round trip time, increasing the link speed from 100Mbps to 1Gbps gives only a 22% reduction in download time. Other links are somewhere in the middle.

Internet service providers like to advertise their services based on the link speed. They proudly announce that they can now provide gigabit links, and that these are now more than ten times faster than before! And this is true. But, in terms of actual download time, unless you’re downloading very large files, the round trip time is often the limiting factor. The download time, for typical pages, may only improve by a factor of two if the link gets 10x faster. Is it still worth paying extra for that faster Internet connection?
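The effect can be illustrated with a deliberately simple model: download time is a fixed number of round trips plus the time needed to push the bytes through the link. This is only a sketch. The page size and round-trip count below are assumptions chosen for illustration, the model ignores slow start and loss, and it does not reproduce the exact figures in the table.

```python
def download_time(size_bytes: float, bandwidth_bps: float,
                  rtt_s: float, round_trips: int) -> float:
    """Simplified model: latency cost plus transmission cost, in seconds."""
    return round_trips * rtt_s + (size_bytes * 8) / bandwidth_bps


def reduction(size_bytes: float, rtt_s: float, round_trips: int,
              slow_bps: float, fast_bps: float) -> float:
    """Fraction by which download time falls when moving to the faster link."""
    slow = download_time(size_bytes, slow_bps, rtt_s, round_trips)
    fast = download_time(size_bytes, fast_bps, rtt_s, round_trips)
    return 1 - fast / slow


PAGE_BYTES = 1_000_000   # assumed page size
ROUND_TRIPS = 4          # assumed handshakes plus request/response exchanges

low_rtt = reduction(PAGE_BYTES, 0.001, ROUND_TRIPS, 100e6, 1e9)
high_rtt = reduction(PAGE_BYTES, 0.300, ROUND_TRIPS, 100e6, 1e9)
print(f"1 ms RTT:   {low_rtt:.0%} faster")
print(f"300 ms RTT: {high_rtt:.0%} faster")
```

Under these assumptions the tenfold bandwidth increase helps far more on the low-latency path than on the high-latency one, which is the same pattern the table shows.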

Reliable Data Transfer with TCP

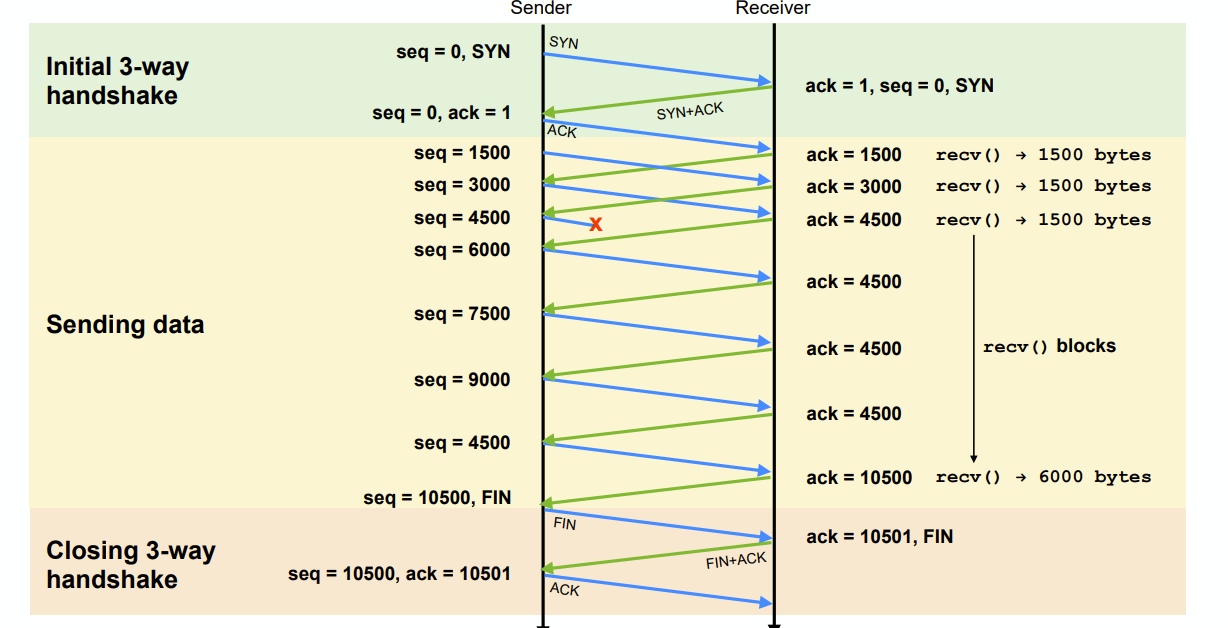

TCP ensures data is delivered reliably and in order

- TCP sends acknowledgements for segments as they are received; retransmits lost data

- TCP will recover original transmission order if segments are delayed and arrive out of order

TCP Code:

The send() function transmits data

- Blocks until the data can be written

- Might not be able to send all the data, if the connection is congested

- Returns actual amount of data sent

- Returns -1 if error occurs, sets errno

The recv() function receives data

- Blocks until data is available or the connection is closed; reads up to BUFLEN bytes

- Returns number of bytes read

- If connection closed, returns 0

- If error occurs, returns -1 and sets errno

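- Received data is not null terminated

- Treating it as a C string without adding a terminator is a significant security risk, since string functions can read past the end of the buffer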
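Because send() may accept only part of the data and recv() may return only part of a message, callers usually loop. The sketch below shows one way to do this in Python, where errors raise OSError rather than returning -1 and setting errno. It uses socket.socketpair() as a stand-in for a connected TCP socket so that it runs without a network. The helper names and the BUFLEN value are assumptions for the example.

```python
import socket

BUFLEN = 4096  # plays the role of BUFLEN in the notes; the value is arbitrary


def send_all(sock: socket.socket, data: bytes) -> None:
    """Keep calling send() until every byte has been accepted."""
    view = memoryview(data)
    while len(view) > 0:
        sent = sock.send(view)  # may accept fewer bytes than offered
        view = view[sent:]


def recv_until_closed(sock: socket.socket) -> bytes:
    """Keep calling recv() until it returns b"", meaning the peer closed."""
    chunks = []
    while True:
        chunk = sock.recv(BUFLEN)  # returns at most BUFLEN bytes
        if not chunk:
            break
        chunks.append(chunk)
    return b"".join(chunks)


# A connected pair of stream sockets standing in for a TCP connection.
left, right = socket.socketpair()
send_all(left, b"GET / HTTP/1.1\r\n\r\n")
left.close()
reply = recv_until_closed(right)
right.close()
print(reply)
```

Python's built-in sock.sendall() does the same job as send_all; the loop is written out here only to make the partial-send behaviour visible.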

Each send() call queues data for transmission. TCP splits the queued data into segments, each with its own TCP header.

TCP segments are sent inside IP packets, when allowed by the TCP congestion control algorithm.

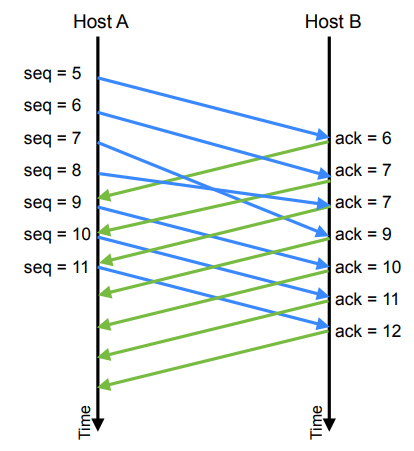

Each segment has a sequence number

Sequence numbers start from the value sent in the TCP handshake

- The initial sequence number is sent in the first packet, i.e. the SYN packet

- The first data packet has a sequence number one higher than the initial sequence number

- Each subsequent packet's sequence number is higher than the previous packet's by the number of data bytes the previous packet carried
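The rules above can be sketched as a small function. The initial sequence number and payload sizes are made-up values, and segment_sequence_numbers is a hypothetical helper written for this example.

```python
def segment_sequence_numbers(initial_seq: int, payload_sizes: list[int]) -> list[int]:
    """Return the sequence number carried by each data segment, in order."""
    # The SYN consumes one sequence number, so data starts at initial_seq + 1.
    seq = (initial_seq + 1) % 2**32
    result = []
    for size in payload_sizes:
        result.append(seq)
        # The field is 32 bits wide, so sequence numbers wrap around.
        seq = (seq + size) % 2**32
    return result


seqs = segment_sequence_numbers(1000, [100, 100, 50])
print(seqs)  # [1001, 1101, 1201]
```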

Separate Sequence Numbers in each Direction

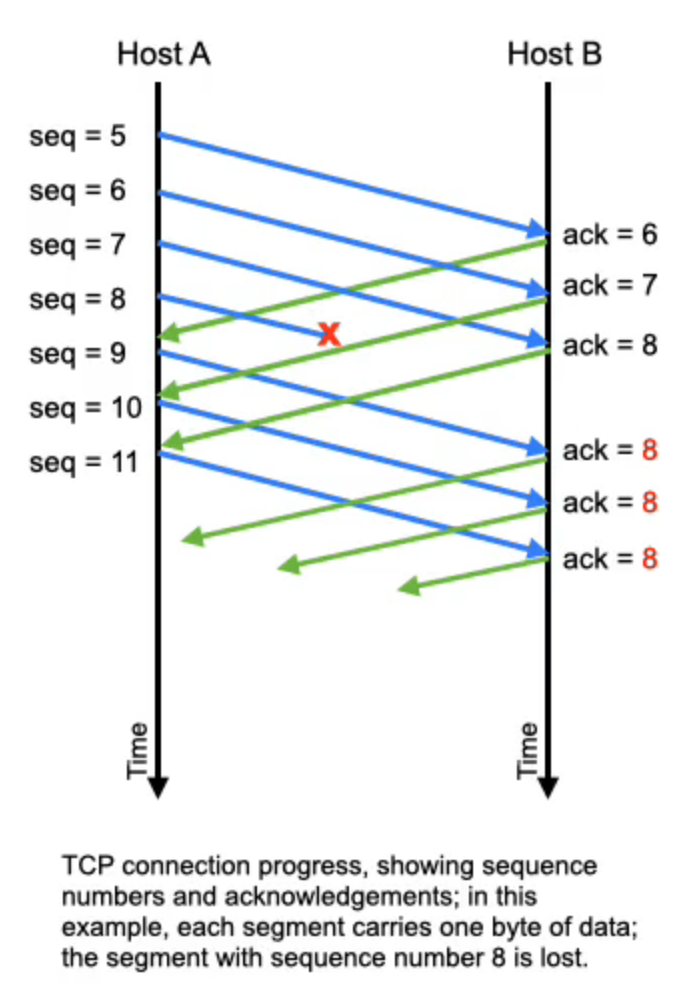

TCP Acknowledgements

- TCP receiver acknowledges each segment received

- Each TCP segment has sequence number and acknowledgement number

- A segment is sent to acknowledge each received segment; it contains an acknowledgement number indicating the sequence number of the next contiguous byte expected

- Includes data if any ready to send, or can be empty apart from acknowledgement

- If a packet is lost, subsequent packets will generate duplicate acknowledgements

  - In the example, the segment with sequence number 8 is lost

  - When the segment with sequence number 9 arrives, the receiver acknowledges 8 again, since that’s still the next contiguous sequence number expected

- Can send delayed acknowledgements

  - e.g., acknowledge every second packet if there is no data to send in the reverse direction
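A minimal sketch of the receiver side is shown below. To match the example in the notes it numbers whole segments (6, 7, 8, ...) rather than bytes, which is a simplification of real TCP, and it acknowledges every segment immediately (no delayed acknowledgements). The function name and the arrival order are assumptions for illustration.

```python
def cumulative_acks(arrivals: list[int], next_expected: int) -> list[int]:
    """For each arriving segment number, return the acknowledgement sent back."""
    buffered = set()
    acks = []
    for seq in arrivals:
        if seq >= next_expected:
            buffered.add(seq)  # hold out-of-order segments until the gap fills
        # Advance past every segment that is now contiguous.
        while next_expected in buffered:
            buffered.remove(next_expected)
            next_expected += 1
        acks.append(next_expected)  # always the next segment still missing
    return acks


# Segment 8 is lost at first and only arrives after 9, 10 and 11.
acks = cumulative_acks([6, 7, 9, 10, 11, 8], next_expected=6)
print(acks)  # [7, 8, 8, 8, 8, 12]
```

The run of repeated 8s is the stream of duplicate acknowledgements; once segment 8 finally arrives, the acknowledgement jumps straight to 12.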

Loss Detection

TCP uses acknowledgements to detect lost segments

Case 1:

- If data is being sent, but no acknowledgements return, then either the receiver or the network has failed

- TCP treats a timeout as an indication of packet loss and retransmits unacknowledged segments

Case 2:

- Duplicate acknowledgements received → some data lost, but later segments arrived

- TCP treats a triple duplicate acknowledgement – four consecutive acknowledgements for the same sequence number – as an indication of packet loss

- Lost segments are retransmitted
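Case 2 can be sketched from the sender's point of view: count how many times the same acknowledgement number repeats, and treat the third duplicate as a loss signal. This is an illustrative simplification, not a full TCP implementation; the function name and the acknowledgement streams are made up for the example.

```python
def detect_fast_retransmits(acks: list[int], threshold: int = 3) -> list[int]:
    """Return the sequence numbers retransmitted after `threshold` duplicate ACKs."""
    retransmit = []
    last_ack = None
    duplicates = 0
    for ack in acks:
        if ack == last_ack:
            duplicates += 1
            # The original ACK plus three duplicates: four identical ACKs in a row.
            if duplicates == threshold:
                retransmit.append(ack)
        else:
            last_ack = ack
            duplicates = 0
    return retransmit


lost = detect_fast_retransmits([7, 8, 8, 8, 8, 12])   # three duplicates of 8
reordered = detect_fast_retransmits([7, 8, 8, 9, 10])  # only one duplicate
print(lost, reordered)  # [8] []
```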

Triple Duplicate Acknowledgement

Why a triple duplicate acknowledgement?

- Packet delay leading to reordering will also cause duplicate acknowledgements to be generated

- Gives appearance of loss, when the segments are merely delayed

- Use of triple duplicate ACK to indicate packet loss stops reordered packets from being unnecessarily retransmitted

- Packets being delayed enough to cause one or two duplicate acknowledgements is relatively common

- Packets being delayed enough to cause three or more duplicates is rare

- Balance speed of loss detection vs. likelihood of retransmitting a packet that was merely delayed

Ex:

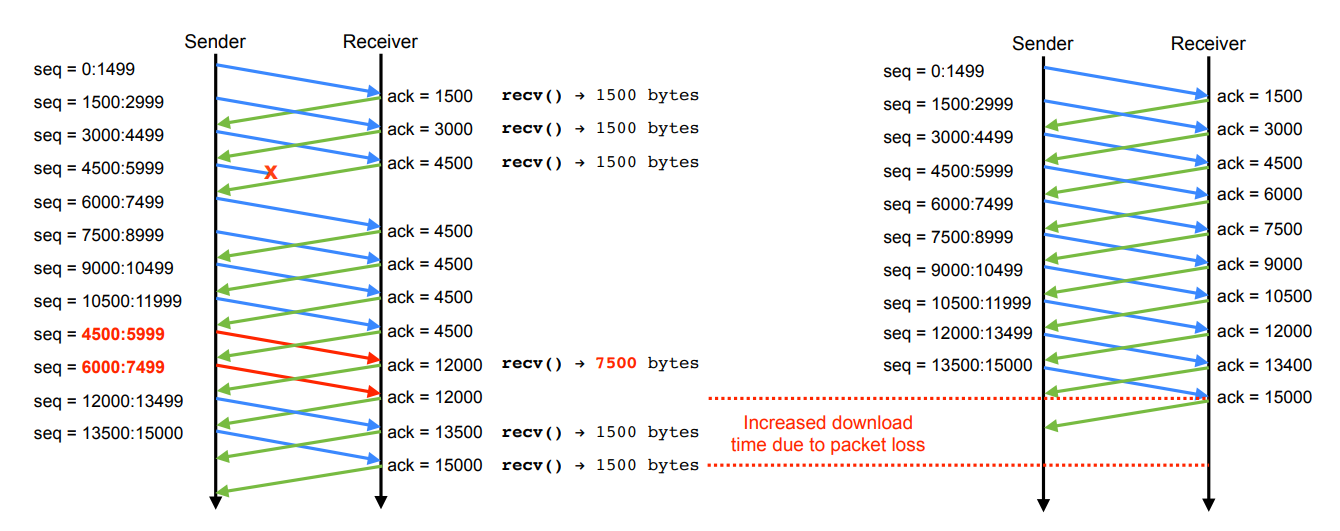

Head Of Line Blocking

Head-of-line blocking increases total download time

Delay depends on relative values of RTT and packet serialization delay

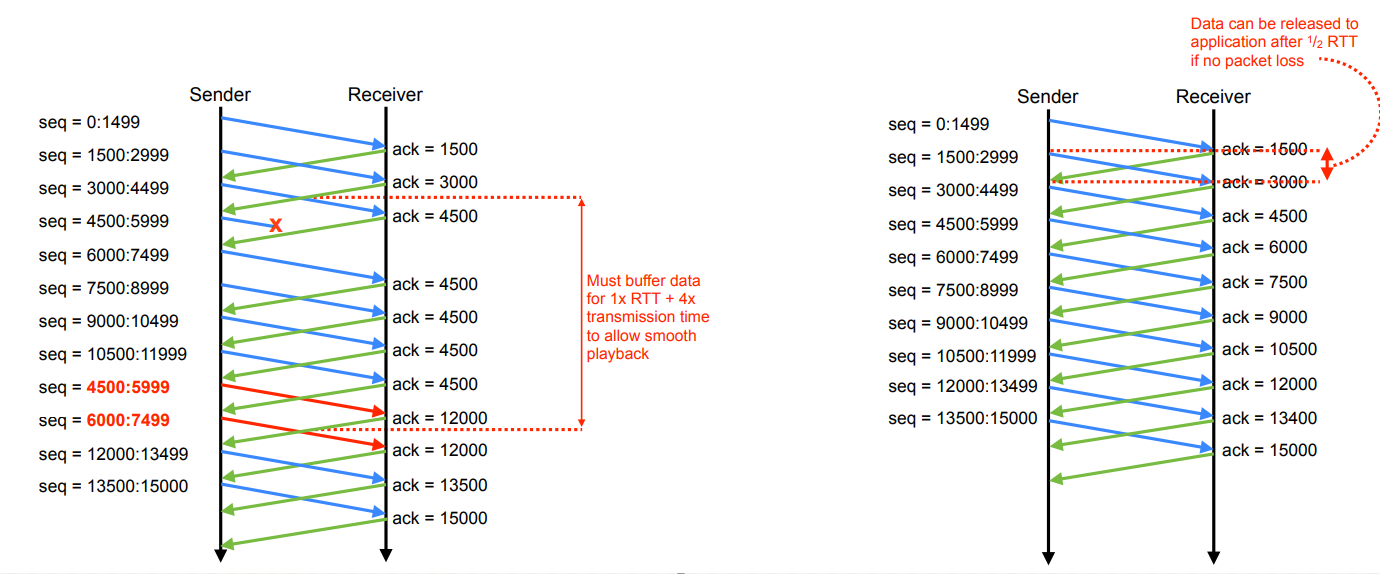

Head-of-line blocking increases latency for progressive and real-time applications

Significant latency increase if file is being played out as it downloads
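A rough model of head-of-line blocking is sketched below. It assumes packets are sent back to back, that one packet is lost, and that its retransmission arrives one RTT plus one serialization delay after the third following packet reaches the receiver (roughly when a triple duplicate acknowledgement would trigger it). All parameter values and the delivery_times helper are assumptions made for illustration.

```python
def delivery_times(n_packets: int, lost_index: int,
                   rtt: float, serialization: float) -> tuple[list[float], list[float]]:
    """Return (arrival, delivered) times in seconds for each packet."""
    if lost_index + 3 >= n_packets:
        raise ValueError("model needs three packets after the lost one")
    # Packet i finishes serializing at (i + 1) * serialization, then takes rtt / 2.
    arrival = [(i + 1) * serialization + rtt / 2 for i in range(n_packets)]
    # Assumed recovery: retransmission arrives one RTT (plus serialization)
    # after the third packet following the loss has arrived.
    arrival[lost_index] = arrival[lost_index + 3] + rtt + serialization
    # In-order delivery: a packet reaches the application only once every
    # earlier packet has arrived.
    delivered = []
    ready = 0.0
    for t in arrival:
        ready = max(ready, t)
        delivered.append(ready)
    return arrival, delivered


arrival, delivered = delivery_times(n_packets=10, lost_index=2,
                                    rtt=0.100, serialization=0.001)
for i, (a, d) in enumerate(zip(arrival, delivered)):
    print(f"packet {i}: arrived {a * 1000:.0f} ms, delivered {d * 1000:.0f} ms")
```

In this model every packet after the lost one has already arrived but is held back until the retransmission shows up, so the added delay is dominated by the RTT when the serialization delay is small.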
